While generative AI offers significant potential benefits for enterprises, successful implementation requires strategic planning and execution. Many organizations rush into adoption without a clear strategy, leading to a poor return on investment and increased risk.

This post explores common mistakes enterprises make when implementing generative AI solutions and offers guidance on how to avoid them.

Let’s break down the problem — and the solution.

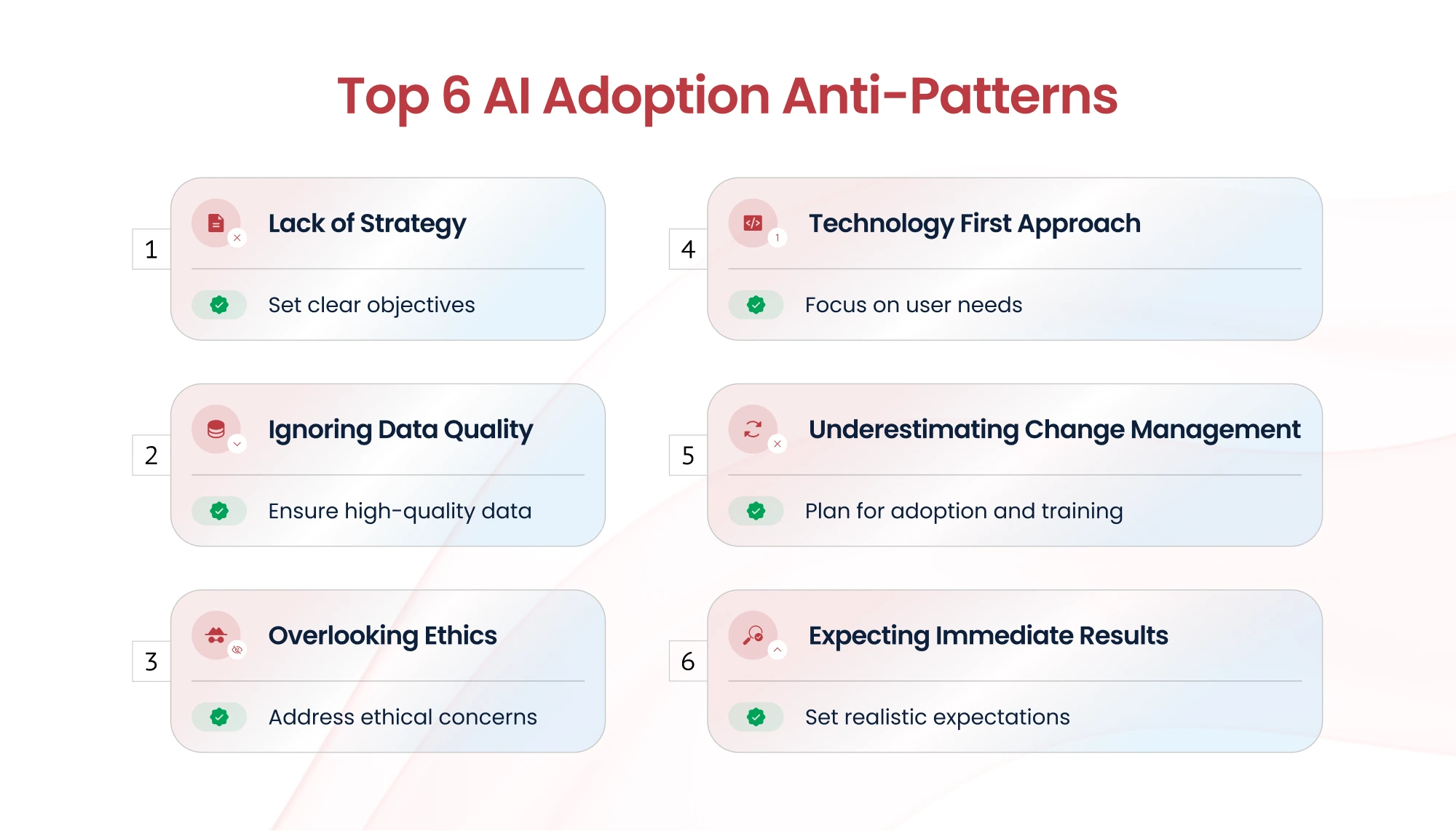

Common Anti-Patterns in AI Adoption

Lack of Clear Strategy and Objectives

One common anti-pattern in AI adoption is the lack of a clear strategy and well-defined objectives. Organizations often rush to implement AI solutions without fully understanding the business problems they aim to solve or the value AI can realistically deliver. This reactive approach leads to fragmented initiatives, misaligned expectations, and wasted resources. Without a strategic framework that aligns AI projects with measurable business goals, companies risk deploying experimental tools that never scale, generating isolated insights that fail to drive action, or overinvesting in hype-driven technologies with little ROI. A successful AI strategy must begin with a clear understanding of organizational priorities, data readiness, and long-term impact—turning AI from a buzzword into a driver of sustainable value.

How to Avoid

- Define specific, measurable, achievable, relevant, and time-bound (SMART) objectives.

- Develop a comprehensive Gen AI strategy aligned with business goals.

- Identify use cases with clear business value.

Technology-First Approach

Another common anti-pattern is pursuing AI initiatives simply to appear innovative or keep up with trends, without a clear understanding of the business value they are meant to deliver. This technology-first mindset often produces solutions in search of a problem, where AI is applied to areas that don't need it or where traditional methods would suffice. As a result, projects struggle to gain traction, fail to deliver measurable impact, and drain valuable time and resources. Without a focus on tangible outcomes, such as improving efficiency, enhancing customer experience, or reducing costs, AI becomes a costly experiment rather than a strategic asset. Sustainable AI adoption must be driven by business needs, not hype.

Consequences

- Low user adoption

- Lack of integration with existing workflows

- Failure to solve real business problems

How to Avoid

- Prioritize business needs and user experience.

- Conduct user research and involve stakeholders early in the process.

- Ensure seamless integration with existing systems.

Ignoring Data Quality and Governance

Ignoring data quality and governance is a critical anti-pattern in AI adoption that can severely undermine the effectiveness of any AI initiative. AI models are only as good as the data they are trained on—poor quality, incomplete, or biased data can lead to inaccurate insights, unreliable predictions, and potentially harmful decisions. Additionally, a lack of data governance can expose organizations to regulatory non-compliance, data privacy breaches, and ethical risks. When data standards, lineage, and access controls are not clearly defined, it becomes difficult to ensure trust, transparency, and accountability in AI systems. To build reliable and responsible AI, organizations must treat data as a strategic asset—establishing strong governance frameworks, maintaining high-quality datasets, and ensuring that data usage aligns with business, legal, and ethical standards.

Consequences

- Inaccurate or biased outputs

- Compliance issues

- Security risks

How to Avoid

- Establish robust data governance policies.

- Ensure data quality and validation.

- Implement data security measures.
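The steps above are deliberately high level. As a minimal sketch of what "ensure data quality and validation" can look like in practice, the Python below screens text records before they are used to ground or fine-tune a generative model. The `Record` fields (`id`, `text`, `source`), the `min_chars` threshold, and the simple email-address check are hypothetical choices for illustration. They are not part of any CloudKitect product, and a regex is no substitute for proper PII detection or a full governance framework.

```python
import re
from dataclasses import dataclass


# Hypothetical record shape; a real pipeline will have its own schema.
@dataclass
class Record:
    id: str
    text: str
    source: str  # where the text came from, used here as minimal lineage


# Crude email pattern standing in for real PII detection (an assumption).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def validate(records, min_chars=20):
    """Split records into accepted ones and (id, reason) rejections."""
    accepted, rejected = [], []
    seen_texts = set()
    for rec in records:
        text = rec.text.strip()
        if not rec.source:
            rejected.append((rec.id, "missing source (no lineage)"))
        elif len(text) < min_chars:
            rejected.append((rec.id, "too short"))
        elif text in seen_texts:
            rejected.append((rec.id, "duplicate"))
        elif EMAIL_RE.search(text):
            rejected.append((rec.id, "possible PII (email address)"))
        else:
            seen_texts.add(text)
            accepted.append(rec)
    return accepted, rejected


if __name__ == "__main__":
    sample = [
        Record("1", "Refund requests are processed within five business days.", "policy.pdf"),
        Record("2", "Refund requests are processed within five business days.", "policy.pdf"),
        Record("3", "Contact jane.doe@example.com for escalations.", "email-export"),
        Record("4", "TBD", "wiki"),
        Record("5", "Our support desk is open Monday to Friday.", ""),
    ]
    ok, bad = validate(sample)
    print([r.id for r in ok])
    for rid, reason in bad:
        print(rid, reason)
```

Returning a reason for every rejected record, rather than silently dropping data, gives governance reviewers an audit trail they can act on.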

Underestimating Change Management

Another key anti-pattern in AI adoption is the lack of effective change management. Introducing AI into an organization is not just a technical shift—it fundamentally impacts workflows, roles, and decision-making processes. Yet many organizations underestimate the cultural and operational changes required for successful AI integration. Without clear communication, training, and stakeholder engagement, employees may resist new AI-driven processes, fear job displacement, or lack the skills to effectively collaborate with AI systems. This resistance can stall adoption, reduce productivity, and ultimately lead to project failure. Successful AI transformation requires a structured change management approach that includes leadership alignment, user education, ongoing support, and a clear vision for how AI will enhance—not replace—human contributions.

Consequences

- Resistance to adoption

- Disruption of workflows

- Lack of training and support

How to Avoid

- Develop a change management plan.

- Provide training and support to employees.

- Communicate the benefits of Gen AI clearly.

Overlooking Ethical Considerations

Neglecting the ethical implications of generative AI is a significant anti-pattern that can lead to serious reputational, legal, and societal consequences. Generative AI systems have the power to create highly realistic content—from text to images to audio—which, if misused or left unchecked, can contribute to misinformation, bias reinforcement, intellectual property violations, or the erosion of user trust. Organizations that deploy generative AI without clear ethical guidelines risk inadvertently generating harmful outputs or amplifying existing inequalities. Moreover, the lack of transparency around how these models generate content and the data they are trained on further complicates accountability. Responsible adoption of generative AI requires proactive steps to ensure fairness, transparency, and safety—including human oversight, ethical review processes, content filtering, and continuous monitoring for unintended consequences. Ethics cannot be an afterthought; it must be embedded into the AI development and deployment lifecycle from the start.

Consequences

- Reputational damage

- Legal issues

- Erosion of trust

How to Avoid

- Establish ethical guidelines and principles.

- Conduct regular ethical reviews.

- Ensure transparency and accountability.

Expecting Instant Results

Having unrealistic expectations about the speed and ease of generative AI implementation is a common anti-pattern that often leads to disappointment and project failure. Many organizations assume that deploying generative AI is a plug-and-play process and expect immediate results without fully understanding the complexity involved. In reality, successful implementation requires significant time and effort, from aligning AI capabilities with business goals and ensuring data readiness to managing infrastructure and training or fine-tuning models for specific use cases. Overlooking these complexities can result in underperforming solutions, user frustration, and unmet ROI expectations. Moreover, integrating generative AI into existing workflows, ensuring compliance, and managing change across teams all add to the implementation challenge. To avoid this pitfall, organizations must approach generative AI with a realistic timeline, cross-functional collaboration, and a phased strategy focused on learning, iteration, and long-term value creation.

Consequences

- Frustration and discouragement

- Premature abandonment of projects

- Missed long-term opportunities

How to Avoid

- Set realistic timelines and milestones.

- Plan for iterative development and continuous improvement.

- Focus on long-term value rather than short-term gains.

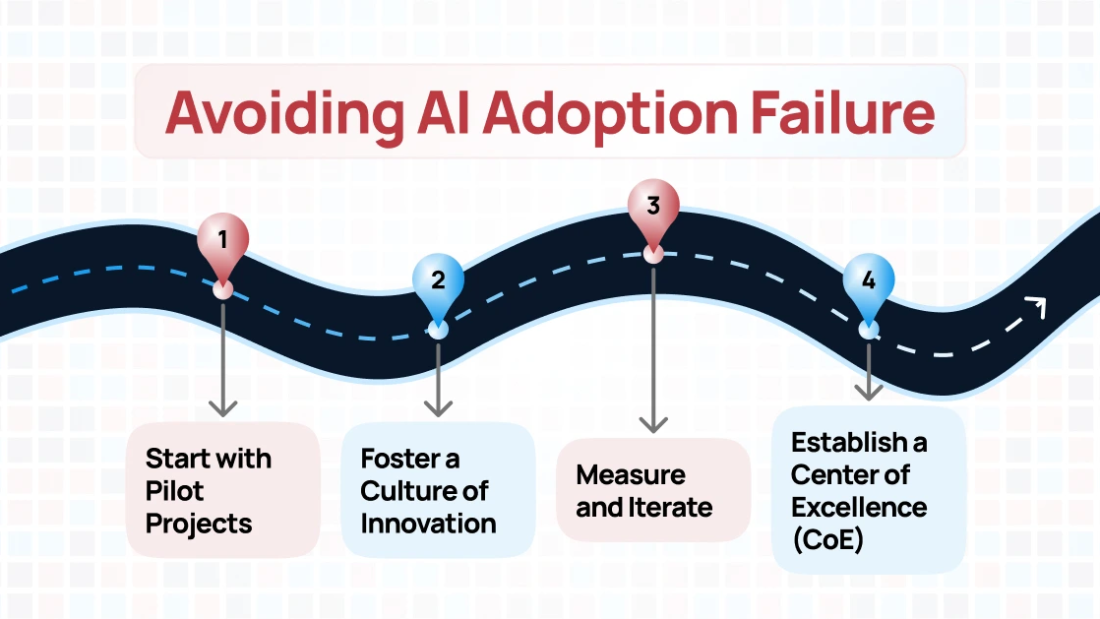

Best Practices for Successful Gen AI Adoption

Establish a Center of Excellence (CoE)

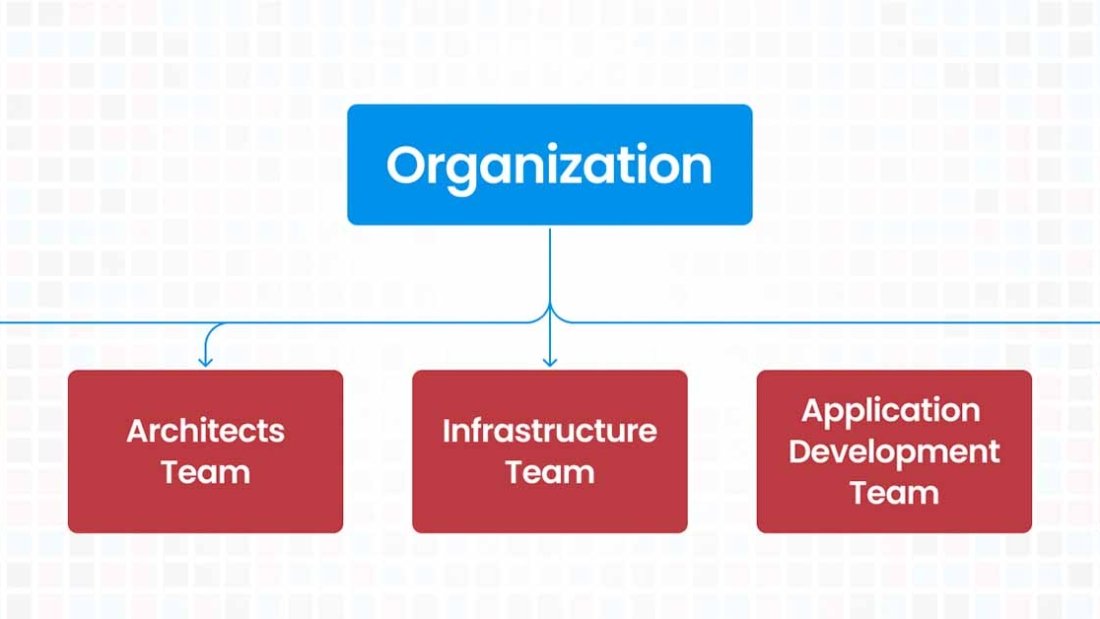

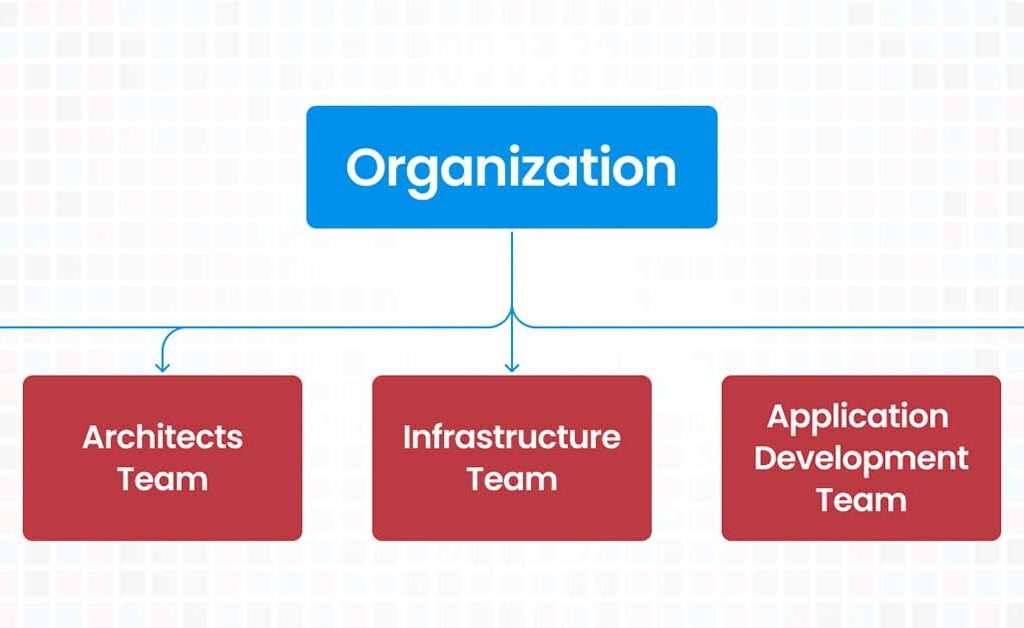

Creating a dedicated team to oversee and guide generative AI initiatives is essential for ensuring strategic alignment, accountability, and sustainable success. This team should bring together cross-functional expertise, including prompt engineers, domain experts, and legal, compliance, and HR professionals, to drive AI adoption collaboratively across departments. Its role is to define use cases, set ethical and governance standards, monitor performance, manage risks, and ensure that AI efforts are aligned with business objectives. Without a centralized team, AI initiatives can become fragmented, duplicative, or misaligned with organizational priorities. A focused, empowered team serves as the foundation for responsible and effective generative AI deployment, bridging the gap between innovation and enterprise readiness.

CloudKitect AI Command Center empowers organizations with intuitive builder tools that streamline the creation of both simple and complex AI assistants and agents tailored to the organization's brand, eliminating the need for deep technical expertise. With drag-and-drop workflows, pre-built templates, and seamless integration with enterprise data, teams can rapidly prototype, customize, and deploy agents that align with their specific business needs.

Benefits

- Centralized expertise

- Standardized processes

- Improved collaboration

Start with Pilot Projects

Beginning with small-scale pilot projects is a practical and strategic approach to adopting generative AI, allowing organizations to test and refine their strategies before scaling. These pilots serve as controlled environments where teams can validate use cases, assess data readiness, evaluate model performance, and uncover potential challenges—technical, ethical, or operational—early in the process. By starting small, organizations minimize risk, control costs, and gather valuable feedback from users and stakeholders. Pilots also help build internal confidence and organizational buy-in, showcasing tangible results that support broader adoption. Importantly, they provide an opportunity to iterate on governance frameworks, compliance requirements, and integration pathways, ensuring that larger deployments are more predictable, secure, and aligned with business goals. In essence, small-scale pilots turn AI ambition into actionable insight, laying the groundwork for responsible and scalable implementation.

CloudKitect enables organizations to build and deploy end-to-end AI platforms directly within their own cloud accounts, ensuring full data control, security, and compliance. By automating infrastructure setup, agent deployment, and governance, CloudKitect accelerates time to value, helping teams move from concept to production cost-effectively and in less than a week.

Benefits

- Reduced risk

- Valuable insights

- Demonstrated value

Foster a Culture of Innovation

Encouraging experimentation and learning is vital for unlocking the full potential of generative AI within an organization. Fostering a culture of experimentation means giving teams the freedom to explore new ideas, test unconventional approaches, and learn from failures without fear of blame. In the fast-evolving world of AI, success often comes from iterative discovery—trying out different prompts, fine-tuning models, or applying AI to diverse business scenarios to find what truly works. Organizations that promote a growth mindset and support hands-on learning are more likely to identify high-impact use cases and develop innovative, resilient solutions. This culture should be backed by clear leadership support, accessible tools, and safe environments—such as sandboxes or innovation labs—where teams can experiment with low risk. Ultimately, a culture of experimentation drives continuous improvement, accelerates AI maturity, and transforms generative AI from a buzzword into a sustained source of value and competitive advantage.

With CloudKitect’s AI Command Center and its intuitive, user-friendly interface, teams can start experimenting and innovating immediately—without the burden of a steep learning curve.

Benefits

- Increased creativity

- Faster adaptation

- Continuous improvement

Measure and Iterate

Tracking key metrics and making adjustments based on feedback and results is essential for ensuring the long-term success of generative AI initiatives. Without measurable indicators of performance, it becomes difficult to determine whether an AI solution is delivering real business value or aligning with strategic goals. Organizations should define clear success metrics—such as accuracy, user engagement, cost savings, time-to-completion, or compliance adherence—tailored to each use case. Equally important is collecting feedback from end users, stakeholders, and technical teams to understand what’s working, what’s not, and where improvements are needed. By continuously monitoring these inputs, organizations can identify gaps, adapt their models, refine workflows, and optimize performance over time. This data-driven, feedback-informed approach transforms AI implementation into an ongoing cycle of learning and refinement, ensuring solutions remain effective, relevant, and aligned with evolving business needs.
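Defining success metrics can start small. The Python sketch below rolls interaction logs up into a few of the indicators named above (engagement, a thumbs-up rate as a rough accuracy proxy, time to completion, and cost) and compares a baseline against a candidate version of an assistant. The `Interaction` fields, the use of user ratings as an accuracy proxy, and the `summarize`/`compare` helpers are hypothetical illustrations; they do not describe how the AI Command Center's feedback tools work.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


# Hypothetical log entry for a single assistant interaction.
@dataclass
class Interaction:
    user_id: str
    helpful: Optional[bool]  # thumbs up/down; None when the user gave no rating
    seconds_to_complete: float
    cost_usd: float


def summarize(interactions):
    """Roll interaction logs up into a dictionary of success metrics."""
    if not interactions:
        return {"interactions": 0, "active_users": 0, "feedback_rate": 0.0,
                "helpful_rate": 0.0, "avg_seconds_to_complete": 0.0,
                "total_cost_usd": 0.0}
    rated = [i for i in interactions if i.helpful is not None]
    return {
        "interactions": len(interactions),
        "active_users": len({i.user_id for i in interactions}),
        # How often users engage with the feedback loop at all.
        "feedback_rate": len(rated) / len(interactions),
        # Share of rated answers marked helpful: a rough accuracy proxy.
        "helpful_rate": sum(i.helpful for i in rated) / len(rated) if rated else 0.0,
        "avg_seconds_to_complete": mean(i.seconds_to_complete for i in interactions),
        "total_cost_usd": round(sum(i.cost_usd for i in interactions), 4),
    }


def compare(baseline, candidate):
    """Change in each metric from a baseline summary to a candidate summary."""
    return {key: candidate[key] - baseline[key] for key in baseline}


if __name__ == "__main__":
    prompt_v1 = [
        Interaction("u1", True, 40.0, 0.02),
        Interaction("u2", False, 65.0, 0.03),
        Interaction("u1", None, 50.0, 0.02),
    ]
    prompt_v2 = [
        Interaction("u1", True, 30.0, 0.02),
        Interaction("u3", True, 35.0, 0.02),
    ]
    print(compare(summarize(prompt_v1), summarize(prompt_v2)))
```

Comparing a baseline summary with a candidate (for example, a revised prompt) turns user feedback into a concrete signal for deciding whether each iteration actually improved the solution.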

The AI Command Center includes robust feedback tools that enable builders to refine their assistants and agents based on real user input.

Benefits

- Data-driven decision-making

- Improved outcomes

- Enhanced ROI

Conclusion

Avoiding these anti-patterns and implementing best practices is crucial for successful Gen AI adoption. By focusing on strategy, data quality, change management, and ethical considerations, enterprises can unlock the full potential of Gen AI and drive meaningful business value.

Kickstart Your AI Success Journey – Talk to Our Experts!

About us

CloudKitect revolutionizes the way technology startups adopt cloud computing by providing an innovative, secure, and cost-effective turnkey AI solution that fast-tracks digital transformation. CloudKitect offers Cloud Architect as a Service.

Related Resources

How to Avoid AI Adoption Failure: Spotting and Avoiding Anti-Patterns

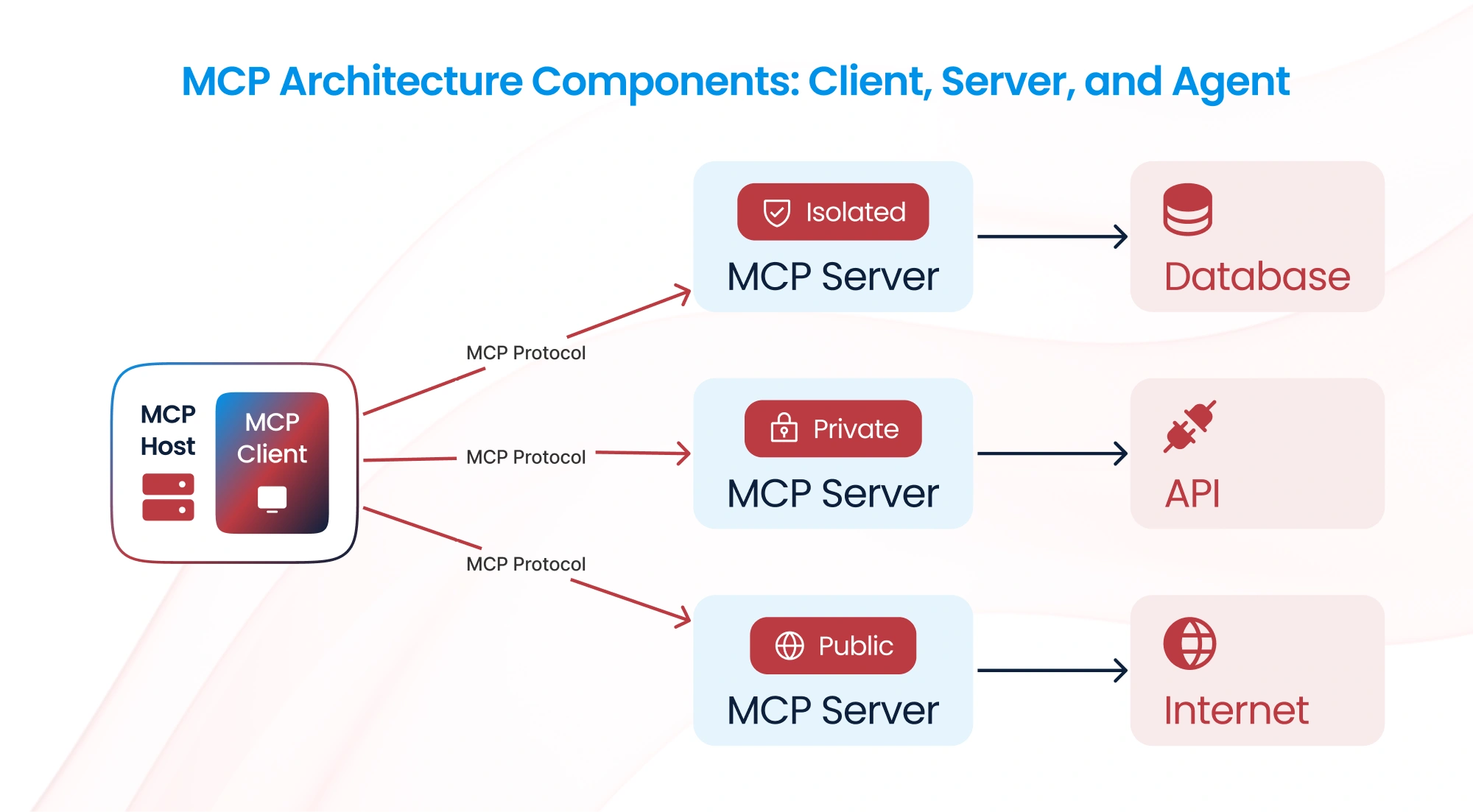

Why MCP Servers Are Critical for Agentic AI —and How to Deploy Them Faster with CloudKitect