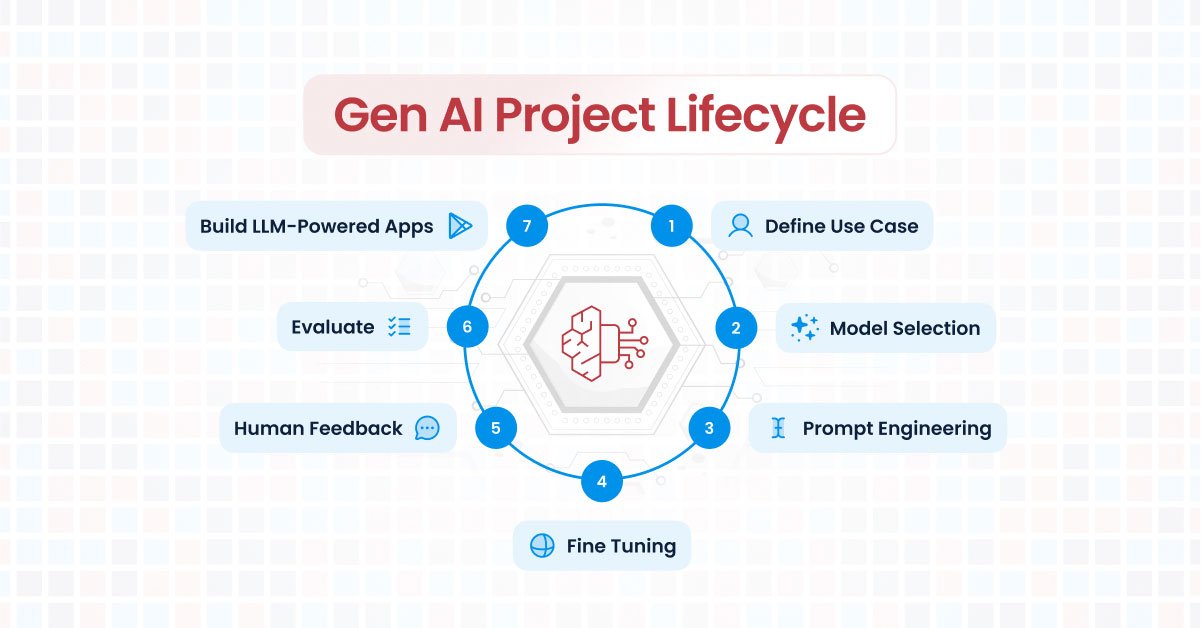

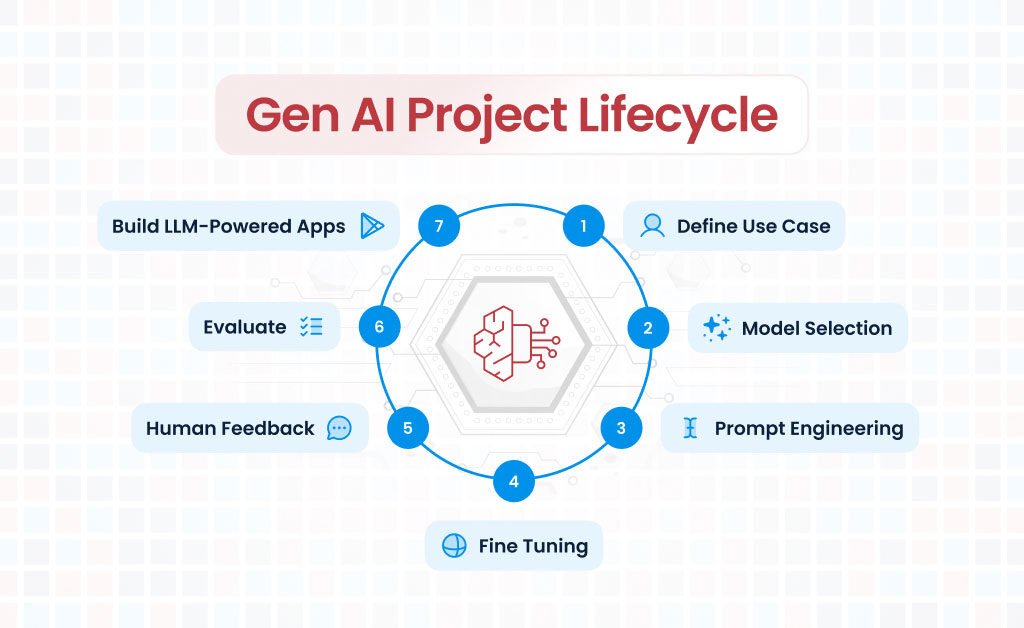

Generative AI is changing the way businesses operate, with the potential to transform both front-office and back-office functions. Whether it’s automating content writing for marketing, summarizing documents, translating languages, or retrieving information, generative AI applications can significantly enhance efficiency and productivity. This blog post outlines the lifecycle of a generative AI project, from defining the use case to integrating the AI into your applications.

Define Use Case

The first step in any generative AI project is to clearly define the use case. What do you want to achieve with AI? This decision will drive the entire project lifecycle and ensure that the AI implementation aligns with your business goals. Common applications include:

Front Office Applications

Content Writing for Marketing

Automating the creation of marketing content can save time and ensure consistency across all communication channels.

Information Retrieval

Quickly fetching relevant information from vast datasets can enhance customer service and support operations.

Back Office Applications

Document Summarization

Automatically summarizing long documents can help in quickly understanding key points and making informed decisions.

Translations

Converting documents from one language to another can facilitate global operations and communication.

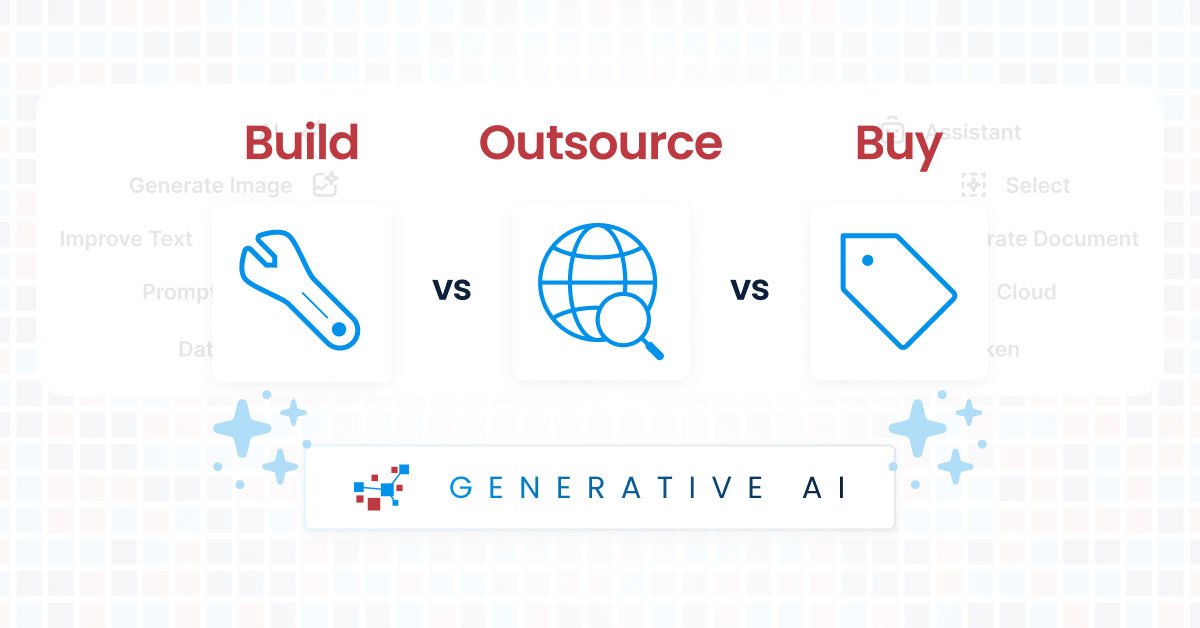

Choose Model

Once the use case is defined, the next step is to choose the appropriate model. You have two primary options:

Train Your Own Model

This approach offers more control and customization but requires significant resources, including data, computational power, and expertise.

Use an Existing Base Model

Leveraging pre-trained models can save time and resources. Different models are suited for different tasks, so it’s essential to choose one that aligns with your specific needs. For example, models like GPT-4 are versatile and can handle a variety of tasks, from text generation to translation.

Prompt Engineering

Prompt engineering is a crucial step in ensuring that the AI provides relevant and accurate outputs. This involves using in-context learning techniques, such as:

Zero-shot Learning

The prompt contains only the task instruction, with no examples. The model relies entirely on the general knowledge it acquired during pre-training.

One-shot Learning

The model is given one example to make predictions.

Few-shot Learning

The model is provided with a few examples to improve its predictions.

By carefully designing prompts, you can guide the AI to produce outputs that are contextually appropriate and aligned with your requirements.
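As a rough illustration of the three techniques above, the sketch below assembles zero-shot, one-shot, and few-shot prompts from the same instruction. The `build_prompt` helper, the `Input:`/`Output:` layout, and the sentiment examples are assumptions made for this example, not a format required by any particular model.

```python
from typing import Sequence, Tuple


def build_prompt(instruction: str,
                 examples: Sequence[Tuple[str, str]],
                 query: str) -> str:
    """Assemble an in-context learning prompt.

    0 examples gives a zero-shot prompt, 1 a one-shot prompt,
    and several a few-shot prompt.
    """
    parts = [instruction.strip()]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The last block leaves "Output:" open for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


# Illustrative task and examples (hypothetical data).
instruction = "Classify the sentiment of the review as positive or negative."
examples = [
    ("The onboarding was quick and painless.", "positive"),
    ("Support never answered my ticket.", "negative"),
]
query = "The dashboard is confusing."

zero_shot = build_prompt(instruction, [], query)
one_shot = build_prompt(instruction, examples[:1], query)
few_shot = build_prompt(instruction, examples, query)

print(few_shot)
```

The only difference between the three prompts is how many worked examples precede the real input, which makes it easy to compare them side by side on the same query.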

Fine Tuning

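In this lifecycle, fine-tuning means tuning the model’s output by adjusting inference-time sampling parameters. It does not involve retraining the model’s weights. The main parameters are: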

Temperature

Controls the randomness of the output. Lower values make the output more deterministic, while higher values increase creativity.

Top-K Sampling

Limits the sampling pool to the K most probable next tokens, so that unlikely tokens can never be selected.

Top-P (Nucleus) Sampling

Selects from the smallest set of predictions whose cumulative probability exceeds a threshold P, balancing diversity and relevance.

Adjusting these parameters refines the model’s output so that it meets your specific needs.
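To make the three parameters concrete, here is a minimal, self-contained sketch of how they could be applied to a next-token distribution. The toy vocabulary and scores are invented for illustration, and the order of operations (temperature, then top-K, then top-P) is one common convention rather than what any specific model or API necessarily does.

```python
import math
import random
from typing import Dict, Optional


def softmax_with_temperature(logits: Dict[str, float],
                             temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def filter_top_k(probs: Dict[str, float], k: int) -> Dict[str, float]:
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def filter_top_p(probs: Dict[str, float], p: float) -> Dict[str, float]:
    """Keep the smallest set of tokens whose cumulative probability reaches p."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, prob in ranked:
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {tok: prob / total for tok, prob in kept}


def sample_token(logits: Dict[str, float],
                 temperature: float = 1.0,
                 top_k: Optional[int] = None,
                 top_p: Optional[float] = None,
                 rng: Optional[random.Random] = None) -> str:
    """Sample one token after applying temperature, top-K and top-P in turn."""
    rng = rng or random.Random()
    probs = softmax_with_temperature(logits, temperature)
    if top_k is not None:
        probs = filter_top_k(probs, top_k)
    if top_p is not None:
        probs = filter_top_p(probs, top_p)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Toy next-token scores (hypothetical values).
toy_logits = {"cloud": 2.0, "server": 1.0, "the": 0.5, "banana": -1.0}
print(sample_token(toy_logits, temperature=0.7, top_k=3, top_p=0.9))
```

With `top_k=1` the sampler always returns the most probable token, which is the fully deterministic end of the spectrum; raising the temperature or loosening the filters lets less likely tokens through.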

Human Feedback

Incorporating human feedback is essential for improving the AI’s performance. Have humans evaluate the outputs, iterate on prompt engineering, and fine-tune the parameters to ensure that the model produces the desired results. This step helps in minimizing errors and hallucinations, where the model generates incorrect or nonsensical outputs.
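One simple way to act on reviewer feedback is to record a rating and a hallucination flag for each output, then compare prompt or parameter variants on those numbers. The sketch below assumes a hypothetical review record with `variant`, `rating` (1 to 5), and `hallucination` fields; the field names and sample reviews are made up for illustration.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def summarize_feedback(reviews: List[dict]) -> Dict[str, dict]:
    """Aggregate human reviews per prompt/parameter variant."""
    grouped = defaultdict(list)
    for review in reviews:
        grouped[review["variant"]].append(review)

    summary = {}
    for variant, items in grouped.items():
        summary[variant] = {
            "reviews": len(items),
            "mean_rating": mean(item["rating"] for item in items),
            # Share of outputs a reviewer flagged as incorrect or nonsensical.
            "hallucination_rate": sum(item["hallucination"] for item in items) / len(items),
        }
    return summary


# Hypothetical reviewer records for two prompt variants.
reviews = [
    {"variant": "prompt_v1", "rating": 2, "hallucination": True},
    {"variant": "prompt_v1", "rating": 3, "hallucination": False},
    {"variant": "prompt_v1", "rating": 4, "hallucination": False},
    {"variant": "prompt_v2", "rating": 4, "hallucination": False},
    {"variant": "prompt_v2", "rating": 5, "hallucination": False},
]

summary = summarize_feedback(reviews)
best_variant = max(summary, key=lambda name: summary[name]["mean_rating"])
print(best_variant, summary[best_variant])
```

Tracking the hallucination rate separately from the overall rating helps, because a variant can read well to reviewers while still getting facts wrong.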

Evaluate with Sample Data

Before full deployment, it’s critical to evaluate the model with new sample data. This ensures that the model performs well in real-world scenarios and can handle variations in the input data. Thorough testing helps in identifying and addressing any potential issues before they impact your operations.
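A small evaluation harness can make this step repeatable. The sketch below runs a model over held-out prompt/expected-answer pairs and reports accuracy together with the failing cases. The `stub_translator` function is a placeholder standing in for a real model call, the sample pairs are invented, and exact match after normalization is only one possible scoring rule.

```python
from typing import Callable, List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def evaluate(model: Callable[[str], str],
             samples: List[Tuple[str, str]]) -> dict:
    """Score a model on (prompt, expected) pairs using normalized exact match."""
    if not samples:
        raise ValueError("samples must not be empty")
    failures = []
    for prompt, expected in samples:
        answer = model(prompt)
        if normalize(answer) != normalize(expected):
            failures.append({"prompt": prompt, "expected": expected, "got": answer})
    passed = len(samples) - len(failures)
    return {"accuracy": passed / len(samples), "failures": failures}


def stub_translator(prompt: str) -> str:
    """Placeholder for a real model call (assumption for this sketch)."""
    known = {"good morning": "Buenos días", "thank you": "Gracias"}
    return known.get(normalize(prompt), "")


# Held-out samples that were not used while designing prompts.
held_out = [
    ("Good morning", "buenos días"),
    ("Thank you", "gracias"),
    ("See you tomorrow", "hasta mañana"),
]

report = evaluate(stub_translator, held_out)
print(f"accuracy={report['accuracy']:.2f}, failures={len(report['failures'])}")
```

Keeping the list of failures, not just the score, shows which kinds of input the model struggles with before they reach production.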

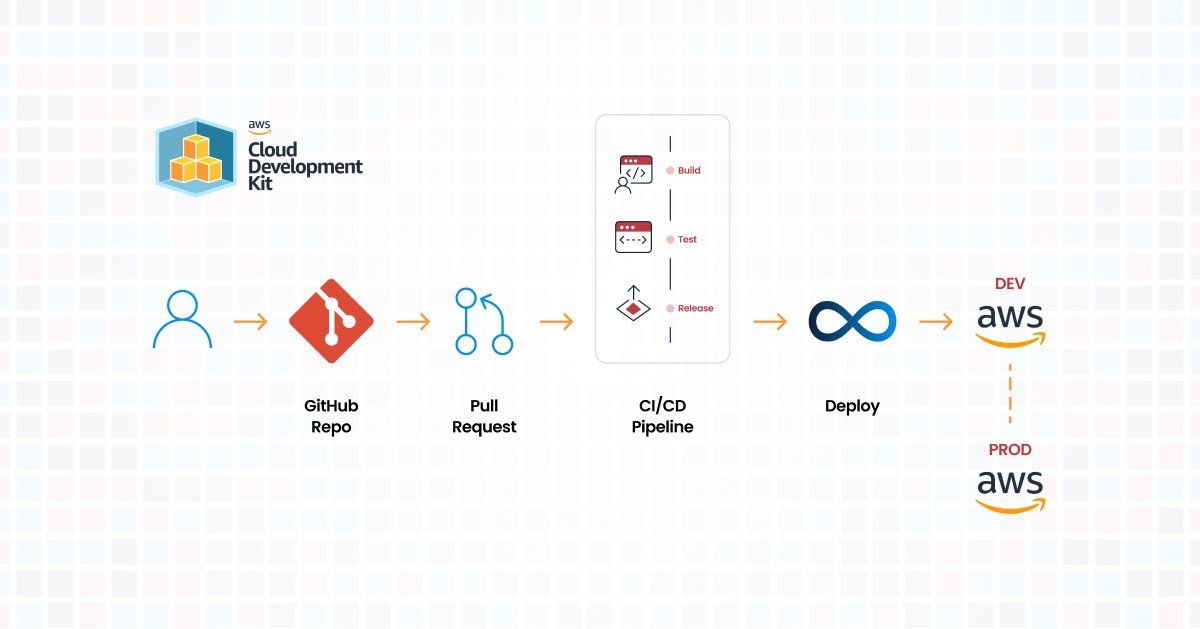

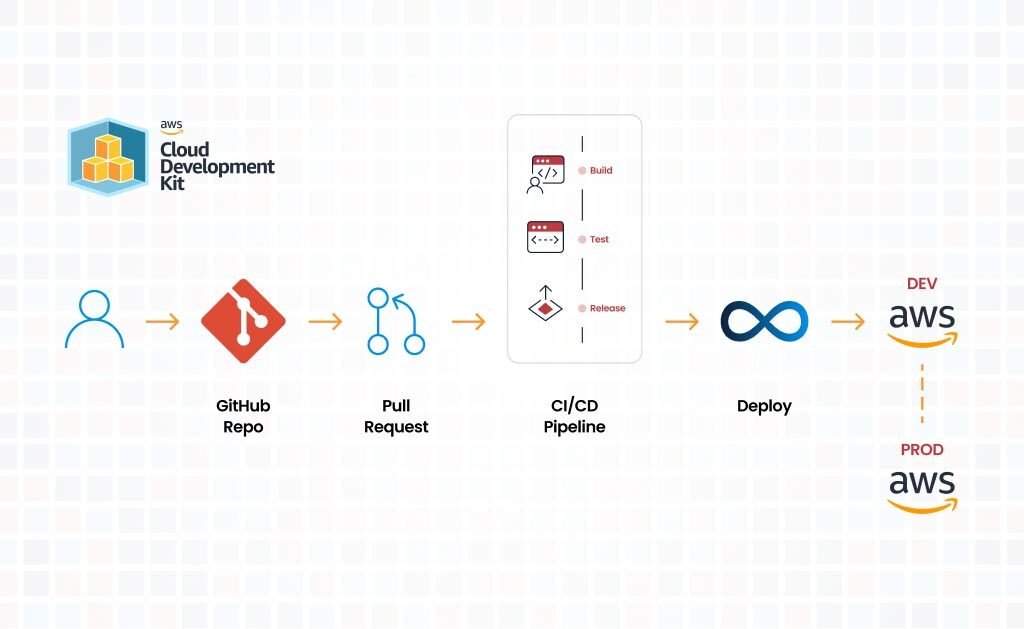

Build LLM-Powered Applications Using APIs

The final step is to integrate the AI model with your applications using APIs. Ensure that your implementation makes the best use of computational resources and is scalable to handle increased loads. Proper integration allows you to leverage the full potential of generative AI, driving efficiency and innovation in your business processes.
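As an integration sketch, the code below wraps a model call in retry logic with exponential backoff, which is one common way to stay resilient under rate limits and load spikes. Everything here is an assumption for illustration: the payload fields, the `TransientError` type, and the `fake_send` function (which simulates two failures before succeeding) are hypothetical and do not describe any specific provider’s API.

```python
import time
from typing import Any, Callable, Dict


class TransientError(Exception):
    """Hypothetical error for retryable failures such as rate limits."""


def build_payload(prompt: str,
                  temperature: float = 0.2,
                  max_tokens: int = 256) -> Dict[str, Any]:
    """Build a request body; the field names are illustrative only."""
    return {"prompt": prompt, "temperature": temperature, "max_tokens": max_tokens}


def call_with_retry(send: Callable[[Dict[str, Any]], Dict[str, Any]],
                    payload: Dict[str, Any],
                    max_attempts: int = 3,
                    base_delay: float = 0.5,
                    sleep: Callable[[float], Any] = time.sleep) -> Dict[str, Any]:
    """Call send(payload), retrying transient failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return send(payload)
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    raise ValueError("max_attempts must be at least 1")


# Simulated endpoint: fails twice, then succeeds (stands in for a real HTTP call).
attempts = {"count": 0}


def fake_send(payload: Dict[str, Any]) -> Dict[str, Any]:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("simulated rate limit")
    return {"text": f"echo: {payload['prompt']}"}


delays = []  # record the backoff delays instead of actually sleeping
response = call_with_retry(fake_send,
                           build_payload("Summarize this document."),
                           sleep=delays.append)
print(response["text"], delays)
```

Passing the transport in as a `send` callable keeps the retry logic independent of the HTTP client, so the same wrapper can be tested offline and reused across endpoints.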

Conclusion

Embarking on a generative AI project requires careful planning and execution. By following the steps outlined in this lifecycle—defining the use case, choosing the right model, prompt engineering, fine-tuning, incorporating human feedback, evaluating with sample data, and building applications—you can effectively use the power of AI to achieve your business goals. CloudKitect has taken care of the complex task of building and optimizing a generative AI platform on AWS. Now, you only need to integrate it into your environment using user-friendly and intuitive REST APIs.
