The recent MIT study’s findings are a wake-up call for enterprises pouring millions into AI initiatives without seeing tangible results. But the 95% failure rate isn’t inevitable—it’s a symptom of flying blind without proper measurement and accountability.

The Real Problem: AI Without ROI Visibility

While companies focus on top-of-the-line AI models and complex integrations, they’re missing the fundamental question: “Is this actually saving us time and money?” The study reveals that organizations succeed when they solve targeted problems with specialized solutions, but only if they can measure and optimize their impact.

ROI Visibility from Day One

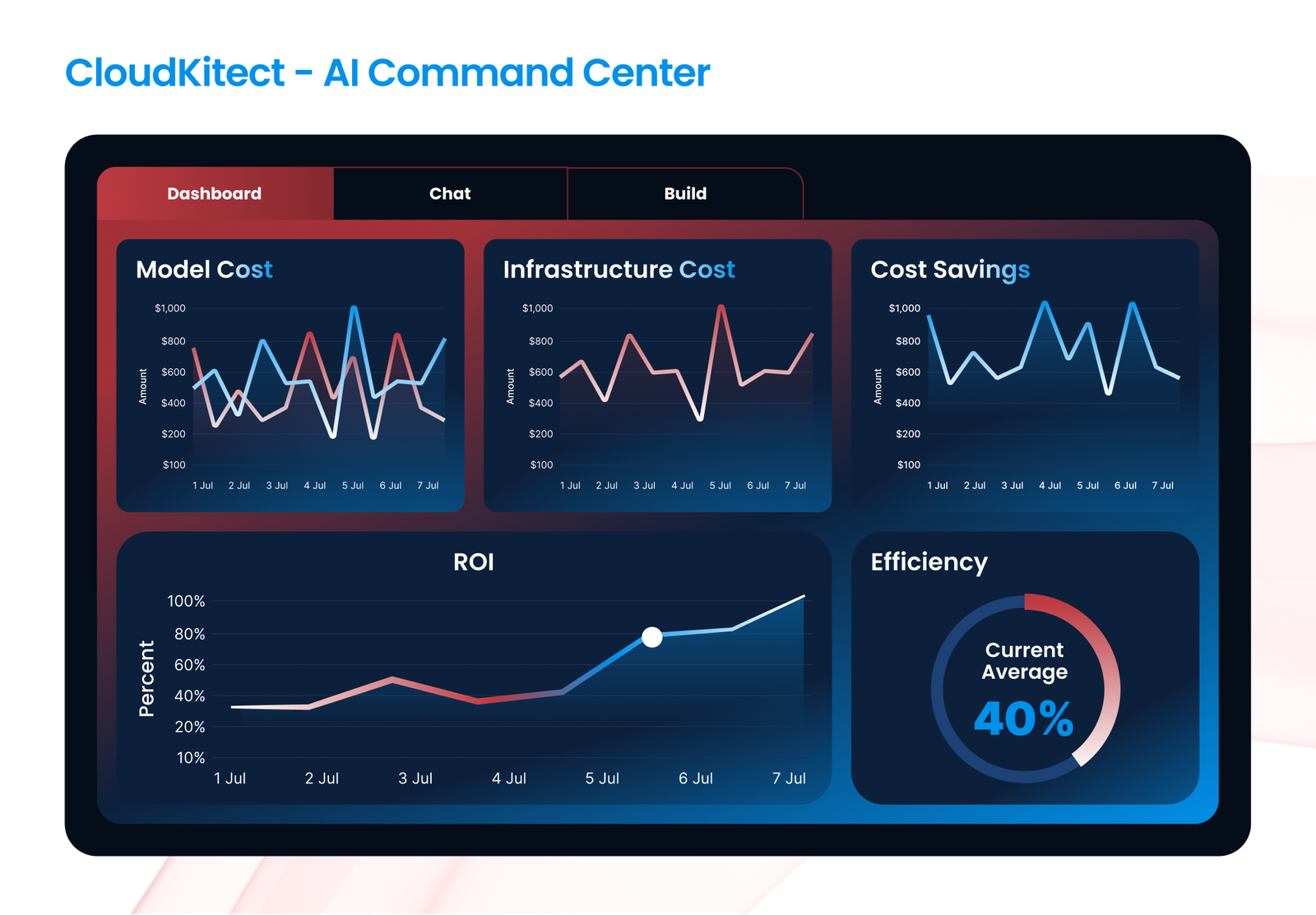

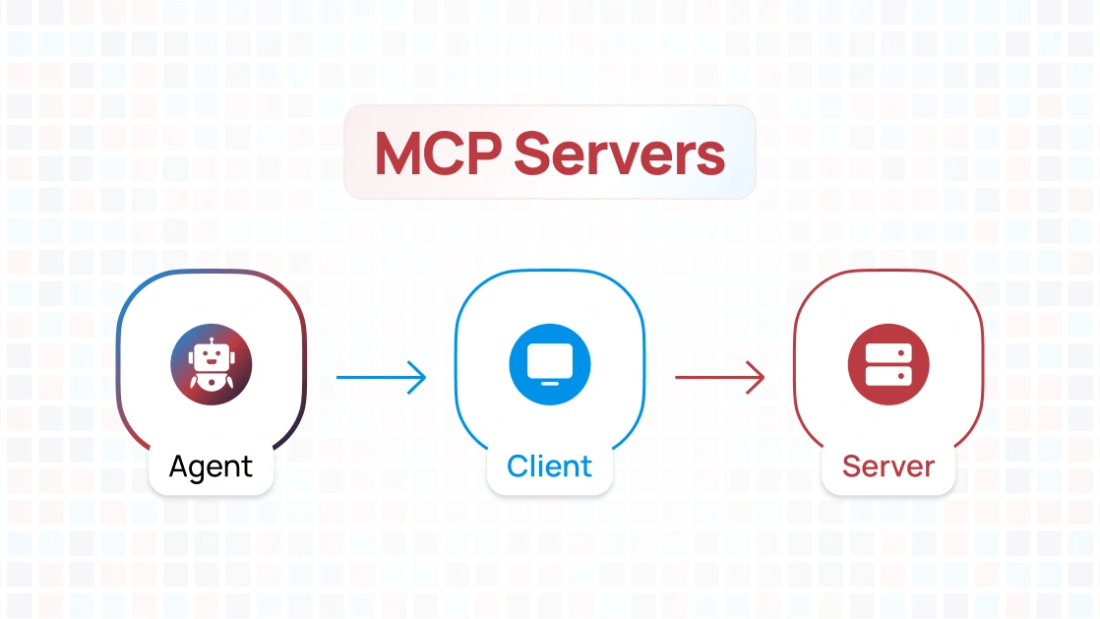

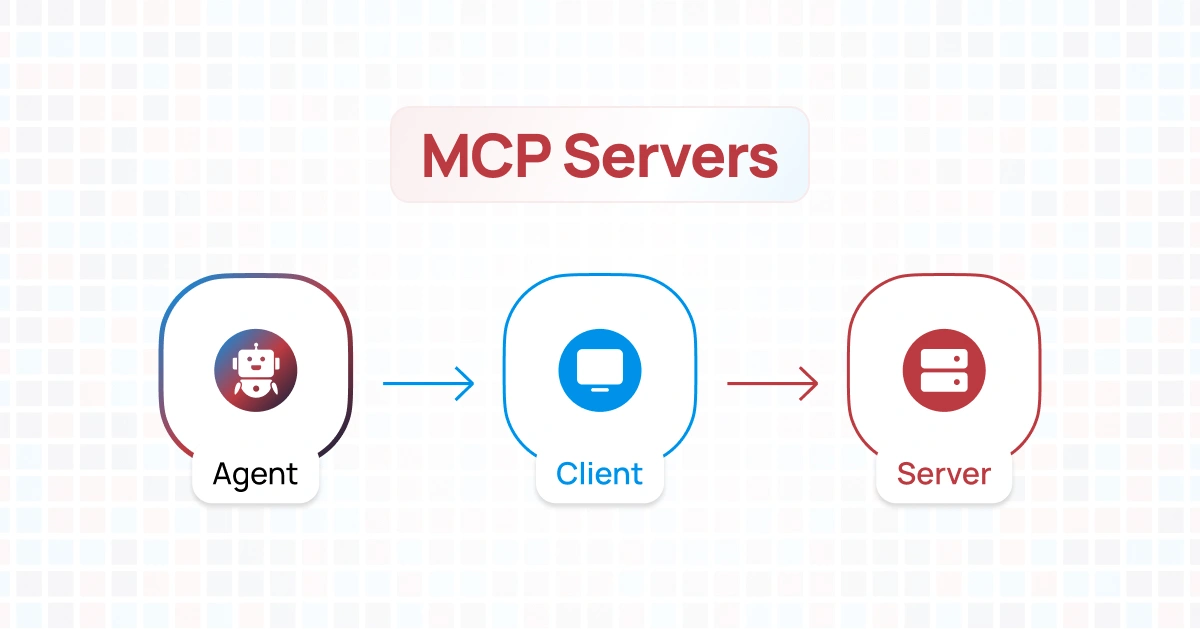

This is where CloudKitect transforms the equation. Working in partnership with you, we help you design an AI strategy specific to your organization, with measurable ROI at its core. Once implemented, our platform delivers immediate ROI visibility through a Command Center that measures critical metrics:

- Real-time cost tracking across all AI initiatives

- Productivity gains measured against baseline performance

- Revenue attribution from AI-enhanced processes

- Department-by-department impact analysis
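To make these metrics concrete, here is a minimal sketch of how ROI could be computed from cost, baseline productivity, and attributed revenue, then rolled up by department. It is an illustration only, not CloudKitect's actual implementation: the `Initiative` fields, the function names, and the sample figures are all hypothetical, and it assumes time savings can be valued at a flat hourly rate.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    """One AI initiative, measured over a month (all fields are hypothetical)."""
    name: str
    department: str
    monthly_cost: float              # total AI spend for the month
    baseline_hours: float            # hours the work took before AI
    current_hours: float             # hours the work takes with AI
    hourly_rate: float               # assumed loaded labor cost per hour
    attributed_revenue: float = 0.0  # revenue credited to the AI-enhanced process


def monthly_value(i: Initiative) -> float:
    """Value delivered: hours saved against baseline, priced at the hourly rate, plus revenue."""
    hours_saved = i.baseline_hours - i.current_hours
    return hours_saved * i.hourly_rate + i.attributed_revenue


def roi(i: Initiative) -> float:
    """ROI as a fraction: (value - cost) / cost."""
    if i.monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive to compute ROI")
    return (monthly_value(i) - i.monthly_cost) / i.monthly_cost


def roi_by_department(initiatives: list[Initiative]) -> dict[str, float]:
    """Aggregate value and cost per department, then compute ROI on the totals."""
    totals: dict[str, tuple[float, float]] = {}
    for i in initiatives:
        value, cost = totals.get(i.department, (0.0, 0.0))
        totals[i.department] = (value + monthly_value(i), cost + i.monthly_cost)
    return {dept: (value - cost) / cost for dept, (value, cost) in totals.items()}


# Made-up sample data, for illustration only.
initiatives = [
    Initiative("support_bot", "Support", monthly_cost=4000,
               baseline_hours=400, current_hours=250, hourly_rate=50),
    Initiative("sales_assistant", "Sales", monthly_cost=6000,
               baseline_hours=200, current_hours=180, hourly_rate=60,
               attributed_revenue=3000),
]

# Rank initiatives from highest to lowest ROI to see where to invest or cut.
ranked = sorted(initiatives, key=roi, reverse=True)
```

With these sample numbers, the support bot saves 150 hours worth $7,500 against $4,000 of spend, an ROI of 87.5%, while the sales assistant returns $4,200 against $6,000, an ROI of -30%. Ranking by ROI is one simple way to surface which initiatives deserve more investment and which are underperforming.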

Unlike traditional solutions that take months to implement, CloudKitect gets you operational within a day. Our pre-built connectors integrate with your existing AI tools, cloud infrastructure, and business systems to start capturing ROI metrics immediately. Everything is deployed securely in your own AWS cloud, keeping you in full control of your data.

Focus on What Actually Drives Value

The MIT study shows that successful AI implementations target specific operational improvements. CloudKitect’s analytics help you identify and double down on high-impact use cases while eliminating spend on underperforming initiatives.

The CloudKitect Difference: From AI Expense to Strategic Asset

While 95% of companies struggle with AI ROI, CloudKitect helps clients gain the visibility and control needed to join the successful 5%. Our platform transforms AI from a leap of faith into a measurable, optimizable business driver.

Don’t let your AI investments become another statistic. With CloudKitect, you’ll know within a few days whether your AI initiatives are moving the needle—and have the insights to ensure they continue delivering results.

Ready to turn your AI spend into measurable business value? Get in touch with us at info@cloudkitect.com to get started tomorrow.

The CloudKitect Promise:

We will help you build your specialized AI agents. We’re so confident they will produce the ROI you expect that if you don’t achieve your projected returns within the first 30 days (the opt-out period), we’ll provide a full refund of your implementation fees.

Kickstart Your AI Success Journey – Explore AI Command Center Today!


Related Resources

Turning AI Investment into Measurable Returns: The CloudKitect Advantage

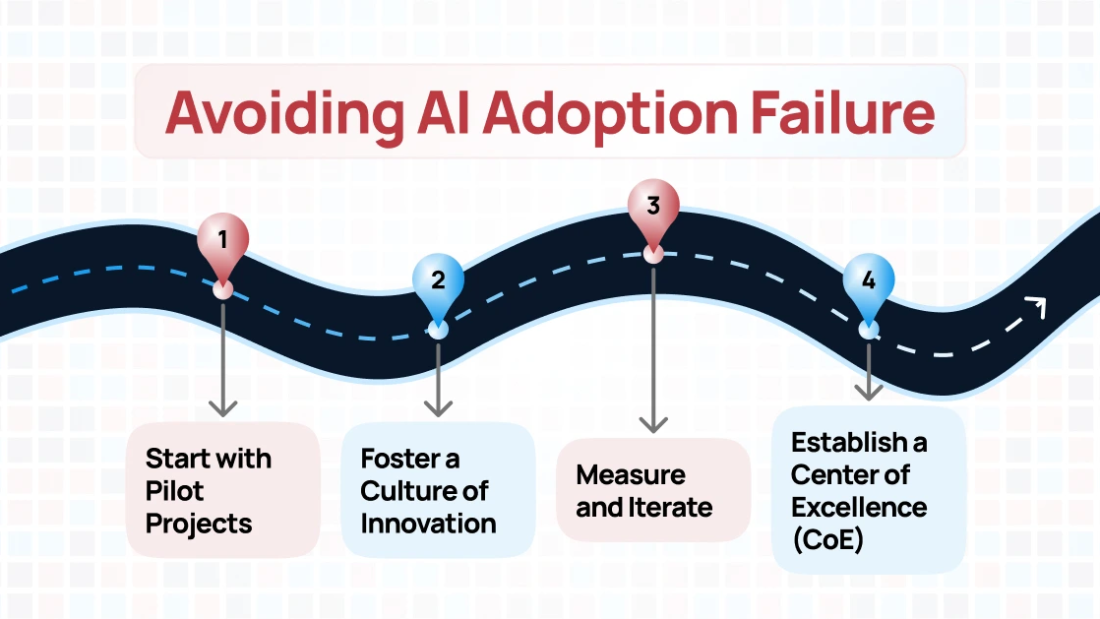

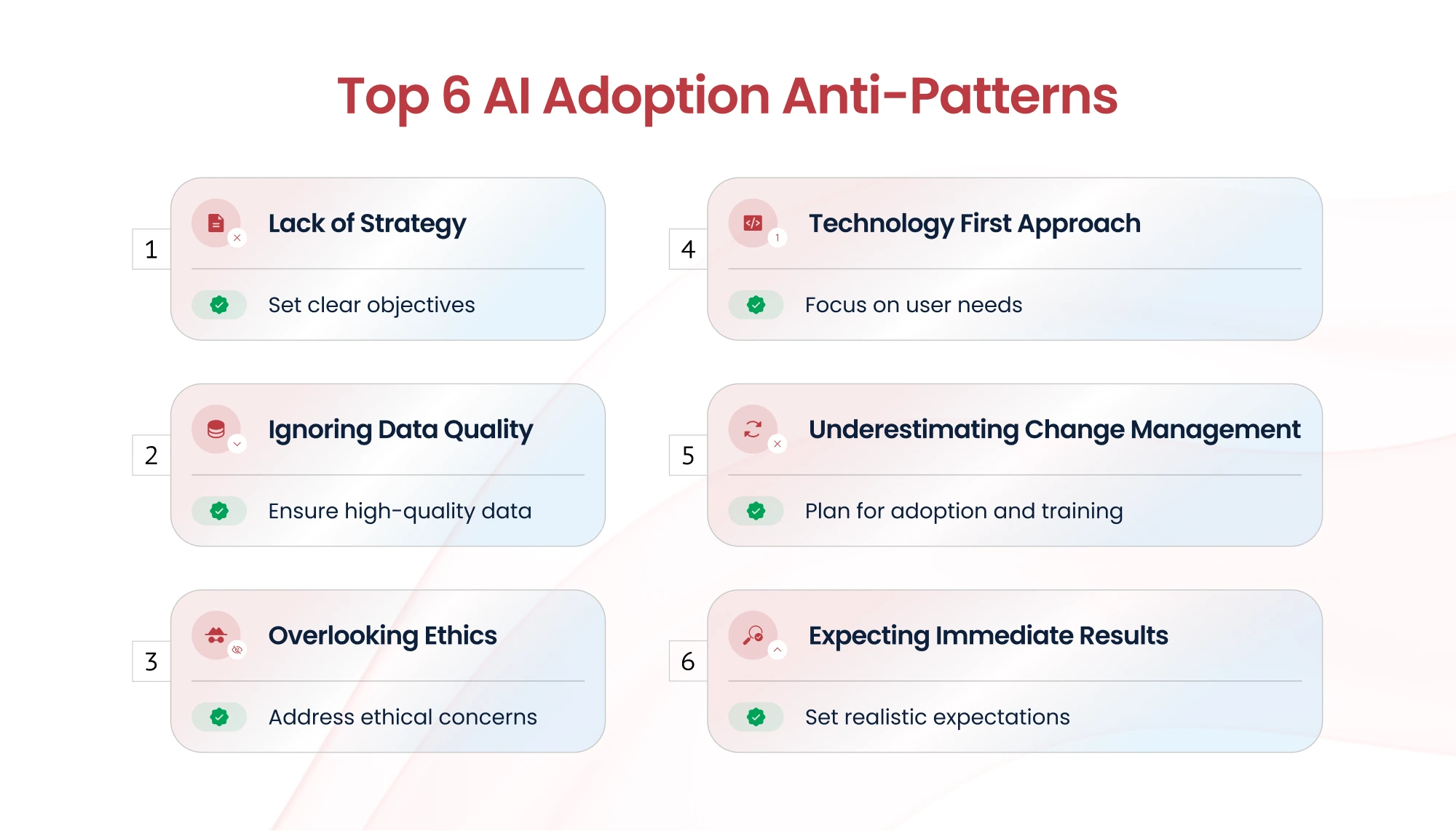

How to Avoid AI Adoption Failure: Spotting and Avoiding Anti-Patterns