In the evolving landscape of artificial intelligence (AI), one term that has been gaining significant attention is “Prompt Engineering.” As generative AI models become more sophisticated, the importance of prompt engineering in leveraging their capabilities cannot be overstated. But what exactly is prompt engineering, and why is it so important? Let’s dive in to understand this aspect of AI.

Understanding Generative AI

Before we delve into prompt engineering, it’s essential to grasp the basics of generative AI. Generative AI refers to a subset of artificial intelligence that focuses on creating new content—such as text, images, music, and more—based on the data it has been trained on. Prominent examples of generative AI include language models like OpenAI’s GPT (Generative Pre-trained Transformer), which can produce coherent and contextually relevant text based on the input it receives.

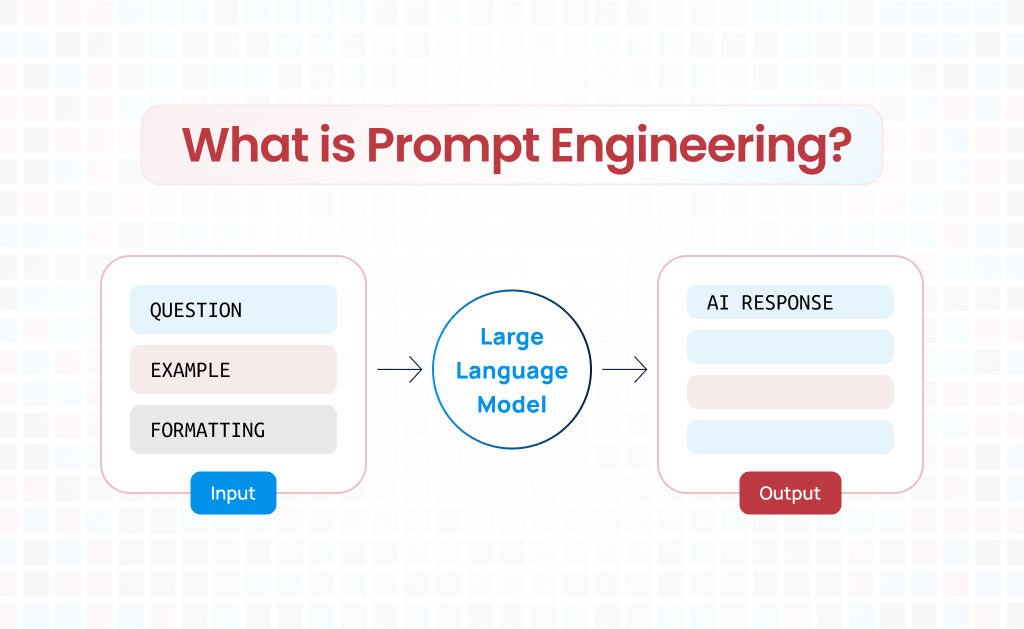

What is Prompt Engineering?

Prompt engineering involves designing and writing effective prompts to guide generative AI models to produce the desired output. Think of it as the art and science of asking the right questions or providing the right cues to an AI model to get the best possible results.

A “prompt” is the input or the initial text given to a generative AI model to start the content generation process. For instance, if you want an AI to write a story about a superhero, your prompt might be, “Be a storyteller and write a comprehensive story about a superhero named Astra who has incredible powers and uses them to help his community.”

Prompt engineering goes beyond just providing any input; it involves carefully constructing prompts to maximize the relevance, coherence, and creativity of the generated output. This process can significantly influence the quality of the results, making it a critical skill for anyone working with generative AI.
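As a minimal sketch of what carefully constructing a prompt can look like in code, the Python snippet below assembles a prompt from a role, a task, and a list of supporting details. The function name `build_prompt` and its parameters are hypothetical, chosen only for illustration; they are not part of any library, and the wording of the assembled prompt is just one possible layout.

```python
from typing import Sequence


def build_prompt(role: str, task: str, details: Sequence[str]) -> str:
    """Combine a role, a task, and supporting details into one prompt string."""
    lines = [f"Act as {role}.", task.strip()]
    if details:
        # Each detail becomes its own bullet so the model sees them separately.
        lines.append("Requirements:")
        lines.extend(f"- {detail}" for detail in details)
    return "\n".join(lines)


prompt = build_prompt(
    role="a storyteller",
    task="Write a comprehensive story about a superhero named Astra.",
    details=[
        "Describe Astra's incredible powers.",
        "Show how Astra uses those powers to help the community.",
    ],
)
print(prompt)
```

Keeping the role, task, and details as separate arguments makes it easy to change one part of the prompt and compare the results.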

The Importance of Prompt Engineering

Now that we have a basic understanding of what prompt engineering is, let’s explore why it is so useful, especially in the context of generative AI models.

Enhancing Output Quality

One of the primary reasons prompt engineering is essential is that it directly impacts the quality of the AI’s output. A well-crafted prompt can lead to more accurate, creative, and contextually appropriate responses. Conversely, a poorly designed prompt can result in irrelevant, nonsensical, or low-quality outputs. By refining prompts, users can utilize the full potential of generative AI models.

Control and Direction

Generative AI models are incredibly versatile, capable of producing a wide range of content. However, without proper guidance, their outputs can be unpredictable. Prompt engineering allows users to steer the AI in a specific direction, ensuring that the generated content aligns with the desired objectives. Whether it’s writing a technical article, generating marketing copy, or creating fictional stories, prompt engineering provides the control needed to achieve targeted results.

Efficiency and Productivity

In a professional setting, efficiency and productivity are essential. Prompt engineering can save time and resources by reducing the need for extensive post-editing and refinement of AI-generated content. By providing clear and precise prompts, users can obtain high-quality outputs more quickly, streamlining workflows and enhancing overall productivity.

Customization and Personalization

Prompt engineering enables the customization and personalization of AI-generated content to suit specific needs and preferences. For instance, businesses can tailor prompts to reflect their brand voice and messaging, while educators can design prompts to create educational materials that resonate with their students. This level of customization enhances the relevance and effectiveness of the generated content.
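One way to make this kind of tailoring repeatable is a reusable prompt template. The sketch below uses Python's standard `string.Template`; the placeholder names (`content_type`, `topic`, `audience`, `brand_voice`) and the sample values are assumptions made for illustration, not a prescribed format.

```python
from string import Template

# Hypothetical template; the placeholder names are illustrative assumptions.
BRAND_PROMPT = Template(
    "Write a $content_type about $topic for $audience. "
    "Use a $brand_voice tone."
)

# substitute() raises KeyError if any placeholder is left unfilled,
# which helps catch incomplete prompts early.
prompt = BRAND_PROMPT.substitute(
    content_type="product announcement",
    topic="our new mobile app",
    audience="small-business owners",
    brand_voice="friendly, plain-spoken",
)
print(prompt)
```

The same template can then be filled with a different audience or voice without rewriting the prompt from scratch.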

Exploration and Creativity

Generative AI models are powerful tools for exploration and creativity. Prompt engineering allows users to experiment with different prompts to discover new ideas, perspectives, and solutions. By varying the inputs, users can uncover unexpected and innovative outputs, fostering creativity and inspiring fresh approaches to problem-solving.

Examples of Prompt Engineering in Action

To illustrate the impact of prompt engineering, let’s look at a few examples:

Content Creation:

For a blog post on the benefits of a healthy diet, a prompt like “Write an article about the benefits of a healthy diet, focusing on the impact on mental health and physical well-being” will yield more targeted content than a vague prompt like “Write about a healthy diet.”

Customer Support:

In a customer support scenario, a prompt such as “Provide a step-by-step guide to troubleshoot a slow internet connection” can help generate a detailed and helpful response, compared to a general prompt like “Help with internet issues.”

Creative Writing:

For a short story about a detective, a prompt like “Write a mystery story set in Victorian London, featuring a brilliant detective who solves crimes using unusual methods” will produce a more engaging narrative than simply prompting “Write a detective story.”

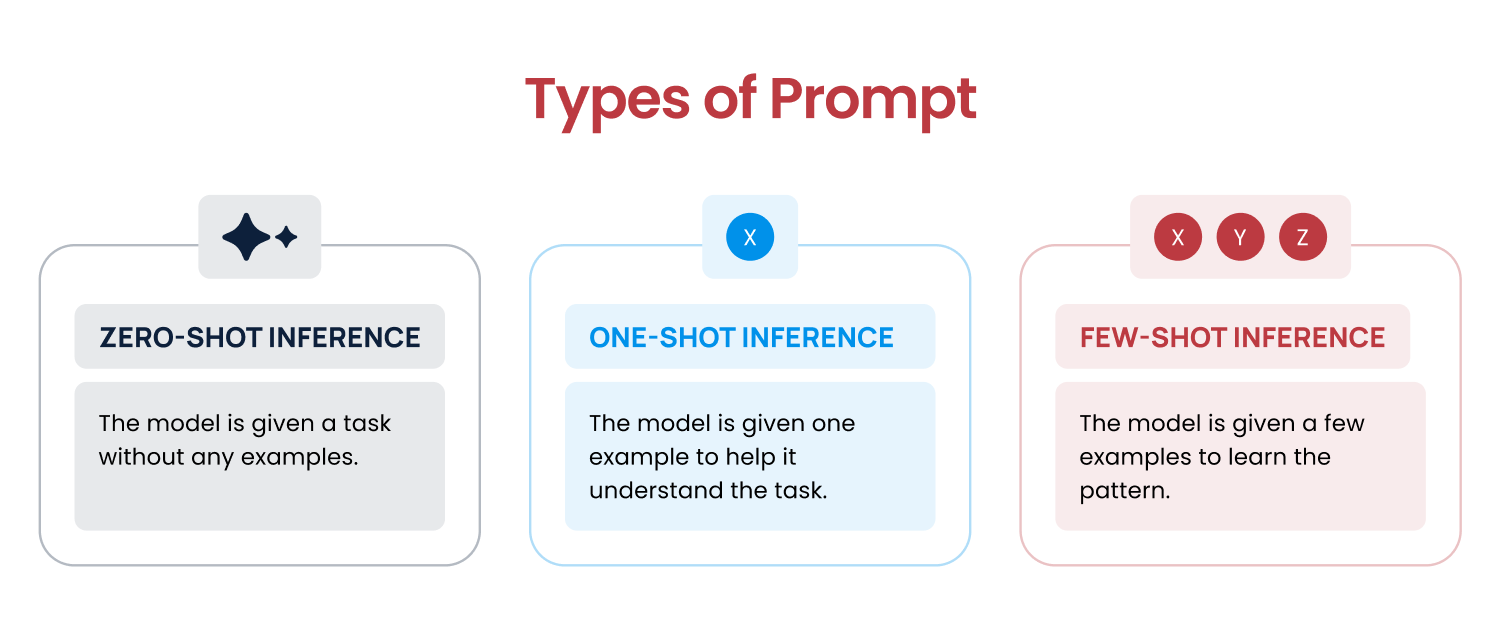

Types of Prompts:

As we see in the examples above, prompts with more context tend to produce better results. This brings us to the concepts of zero-shot, one-shot, and few-shot inference, which are approaches to providing context and examples to the AI model. Let’s explore these types and how they impact the performance of generative AI models.

Zero-Shot Inference

Zero-shot inference refers to the scenario where the AI model is asked to perform a task without any examples of that task in the prompt. The model relies solely on the knowledge and language patterns it acquired during training to generate a response.

Example Prompt for Zero-Shot Inference:

- Task: Summarize a paragraph

- Prompt: “Summarize the following paragraph: The quick brown fox jumps over the lazy dog. The fox is agile and fast, while the dog is slow and sleepy.”

Explanation: In this example, the AI is directly asked to summarize the paragraph without being given any specific examples of how to summarize. The model uses its general understanding of summarization to generate the output.
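A zero-shot prompt like the one above can be sketched in Python as a plain instruction followed by the input text, with no examples in between. The helper name `zero_shot_prompt` is hypothetical, and the blank line separating instruction from text is a layout assumption rather than a requirement of any model.

```python
def zero_shot_prompt(instruction: str, text: str) -> str:
    """Build a prompt that contains only the instruction and the input text."""
    return f"{instruction}\n\n{text}"


paragraph = (
    "The quick brown fox jumps over the lazy dog. "
    "The fox is agile and fast, while the dog is slow and sleepy."
)
prompt = zero_shot_prompt("Summarize the following paragraph:", paragraph)
print(prompt)
```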

One-Shot Inference

One-shot inference involves providing the AI model with one example of the task to guide its response. This single example helps the model understand what is expected, improving the relevance and accuracy of the output.

Example Prompt for One-Shot Inference:

- Task: Translate English to French

- Prompt: “Translate the following sentence to French: ‘The cat sits on the mat.’ Example: ‘The dog barks loudly.’ translates to ‘Le chien aboie fort.’”

Explanation: Here, the AI model is given one example of an English sentence and its French translation. This example helps the model understand how to approach the task of translation for the new sentence.
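The one-shot pattern can be sketched in Python as follows. This is an illustrative layout, not a required format: the helper name `one_shot_prompt` and the `English:` / `French:` labels are assumptions. The sketch places the worked example before the new sentence and leaves the last answer slot empty for the model to complete.

```python
def one_shot_prompt(
    instruction: str,
    example_input: str,
    example_output: str,
    new_input: str,
    input_label: str = "English",
    output_label: str = "French",
) -> str:
    """Show one worked example, then leave the final answer slot empty."""
    return (
        f"{instruction}\n\n"
        f"{input_label}: {example_input}\n"
        f"{output_label}: {example_output}\n\n"
        f"{input_label}: {new_input}\n"
        f"{output_label}:"
    )


prompt = one_shot_prompt(
    instruction="Translate the following sentence to French.",
    example_input="The dog barks loudly.",
    example_output="Le chien aboie fort.",
    new_input="The cat sits on the mat.",
)
print(prompt)
```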

Few-Shot Inference

Few-shot inference extends the concept of one-shot inference by providing the AI model with several examples of the task. This approach gives the model more context and a better understanding of the expected output, which often leads to more accurate and relevant results.

Example Prompt for Few-Shot Inference:

- Task: Generate a short poem

- Prompt: “Create a short poem about nature. Examples: ‘The sun sets in the west, Painting the sky with colors best.’ ‘In the forest deep and green, Nature’s beauty can be seen.’”

Explanation: In this case, the AI model is provided with multiple examples of short poems about nature. These examples help the model grasp the style, structure, and theme expected in the generated poem.
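A few-shot prompt can be assembled the same way by looping over a list of examples. The sketch below is one possible layout under stated assumptions: the helper name `few_shot_prompt`, the `Example N:` labels, and the closing line are all hypothetical choices made for illustration.

```python
from typing import Sequence


def few_shot_prompt(instruction: str, examples: Sequence[str]) -> str:
    """State the instruction, list numbered examples, then ask for a new one."""
    parts = [instruction, ""]
    for number, example in enumerate(examples, start=1):
        # The trailing newline leaves a blank line between examples.
        parts.append(f"Example {number}:\n{example}\n")
    parts.append("Now write a new one in the same style:")
    return "\n".join(parts)


prompt = few_shot_prompt(
    "Create a short poem about nature.",
    [
        "The sun sets in the west,\nPainting the sky with colors best.",
        "In the forest deep and green,\nNature's beauty can be seen.",
    ],
)
print(prompt)
```

Because the examples are passed as a list, adding or removing one changes the prompt from few-shot to one-shot without touching the rest of the code.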

Importance of Different Prompt Types

Each type of prompt (zero-shot, one-shot, and few-shot) has its own strengths and use cases:

1- Zero-Shot Inference:

- Strengths: Useful for quick and broad tasks where specific examples are not necessary. It leverages the model’s general knowledge and versatility.

- Use Cases: Quick queries, general information retrieval, basic tasks like summarization or translation without specific examples.

2- One-Shot Inference:

- Strengths: Provides a balance between minimal context and improved performance. One example helps guide the model effectively without overwhelming it.

- Use Cases: Simple tasks that benefit from a single guiding example, such as straightforward translations, basic text generation, or single-instance tasks.

3- Few-Shot Inference:

- Strengths: Offers the most context of the three approaches, which typically improves accuracy. Multiple examples provide a clear pattern for the model to follow, enhancing the quality of the output.

- Use Cases: Complex tasks requiring nuanced understanding, creative content generation, tasks involving specific styles or formats, and specialized problem-solving.

Conclusion

Prompt engineering is a vital skill for using generative AI. It empowers users to harness the full potential of AI models, enhancing the quality, relevance, and creativity of the generated content. By understanding and applying prompt engineering techniques, individuals and organizations can unlock new levels of efficiency, customization, and innovation in their AI-driven endeavors.

CloudKitect has developed multiple prompt templates tailored to different use cases. If you want to learn how a robust prompt engineering strategy can impact your specific needs, we are here to assist and guide you.

